Machine Learning Experiment Tracking for Medical Software Development

Developing a machine learning (ML) model is usually not a one-time task that can be designed up front and then simply executed to yield the optimal solution. There are simply too many factors that affect how a trained model performs to be able to choose the best ones a priori. These factors include the data used, the algorithm or model architecture, model hyperparameters, training settings and many others. In fact, finding the right combination of these factors through experimentation is an inherent part of developing a machine learning model. However, managing these experiments is a challenge in itself, and the practice of managing them is called experiment tracking.

What exactly is experiment tracking?

Experiment tracking is the process of systematically recording and managing all aspects of machine learning experiments, including the data used, model configurations, training parameters, evaluation metrics, and resulting models. This process enables data scientists and ML engineers to compare different runs, identify what changes lead to performance improvements or regressions, and maintain a clear lineage of model development. By capturing metadata such as code version, hyperparameters, dataset version, and outputs, experiment tracking ensures that results are reproducible and transparent.

In collaborative or regulated environments—such as medical software development—experiment tracking becomes essential. It supports accountability, facilitates auditing, and aligns with standards that require traceability and documentation. There are multiple tools that help automate this tracking, integrating with model training workflows to store and visualize experiments in real time. Ultimately, experiment tracking enhances productivity, model reliability, and regulatory compliance by making the ML development process more organized and measurable.

Medical software regulatory compliance

ML experiment tracking plays a crucial role in ensuring that medical ML systems meet the stringent requirements of regulatory frameworks such as the EU Medical Device Regulation (MDR, CE marking), U.S. FDA regulations (21 CFR) and the Good Machine Learning Practice (GMLP) guiding principles, as well as standards such as ISO 13485, ISO 14971 and IEC 62304.

The following table briefly summarizes the benefits of using an ML experiment tracking system in the context of regulatory requirements for medical software.

| Regulatory Requirement | How Experiment Tracking Helps |
| --- | --- |
| Design History Files (21 CFR, ISO 13485, IEC 62304) | Logs every experiment, dataset, and model for traceability |
| Risk Management (ISO 14971) | Tracks performance variations and failure cases |
| Audit Trails (21 CFR, IEC 62304) | Maintains immutable records of model development |
| Software Verification (MDR, IEC 62304) | Logs test metrics and validation against clinical benchmarks |
| Reproducibility (GMLP) | Tracks environment, hyperparameters, and random seeds |

Using a validated ML experiment tracking system and integrating it into the company's Quality Management System (QMS) can streamline compliance efforts.
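One common way to make a development history tamper-evident, the property behind the "immutable records" row of the table, is to chain log entries with cryptographic hashes. The sketch below is a hypothetical, standard-library-only illustration: the `AuditTrail` class and its fields are assumptions, not part of MLflow or any other tracking tool. A production system would typically also rely on its storage backend and access controls.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditTrail:
    """Hypothetical append-only log; each entry stores the hash of the previous one.

    Editing any past entry changes its hash and breaks the chain,
    which verify() detects.
    """

    entries: list = field(default_factory=list)

    @staticmethod
    def _hash(payload: dict, prev_hash: str) -> str:
        # Canonical JSON (sorted keys) so identical content always hashes identically.
        data = json.dumps(payload, sort_keys=True) + prev_hash
        return hashlib.sha256(data.encode("utf-8")).hexdigest()

    def append(self, payload: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry_hash = self._hash(payload, prev_hash)
        self.entries.append({"payload": payload, "prev_hash": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            # Each entry must point at its predecessor and match its own content.
            if entry["prev_hash"] != prev_hash:
                return False
            if self._hash(entry["payload"], prev_hash) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

For example, after appending a "started" and a "finished" event for a training run, `verify()` returns `True`; changing the payload of the first entry afterwards makes it return `False`.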

What should be logged?

The complete list of things that should be logged in the experiment tracking system depends on the requirements for a particular model (e.g. its purpose) and on the company's profile. However, some categories of information are worth recording in most situations:

- experiment ID, name, description,

- experiment type,

- timestamps for both the beginning and end of the experiment,

- data used, e.g. dataset ID, ground-truth version, ID of the data preprocessing experiment,

- model configurations, training parameters, evaluation metrics,

- author,

- source code version, including possible uncommitted changes,

- environment, e.g. list of Python packages, machine name, OS, CPU, GPU,

- outputs or artifacts created as results of the experiment (e.g. model files, preprocessed data, evaluation metric values).
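The categories listed above can be gathered into a single run record. The sketch below is a hypothetical, standard-library-only illustration: the field names and the `new_run_record`/`finish_run` helpers are assumptions, not the schema of any particular tool. GPU details are left out because the Python standard library cannot query them.

```python
import json
import platform
import subprocess
import sys
import uuid
from datetime import datetime, timezone
from importlib import metadata


def git_state() -> dict:
    """Current commit plus uncommitted changes to tracked files.

    Both values are None when git is missing or the working directory is
    not a repository. Untracked files are not captured by `git diff`.
    """
    try:
        commit = subprocess.run(
            ["git", "rev-parse", "HEAD"], capture_output=True, text=True, check=True
        ).stdout.strip()
        diff = subprocess.run(
            ["git", "diff", "HEAD"], capture_output=True, text=True, check=True
        ).stdout
    except (OSError, subprocess.CalledProcessError):
        return {"commit": None, "uncommitted_diff": None}
    return {"commit": commit, "uncommitted_diff": diff}


def environment() -> dict:
    """Python version, OS, machine name, CPU and installed packages (no GPU info)."""
    packages = sorted(
        f"{dist.metadata.get('Name', 'unknown')}=={dist.version}"
        for dist in metadata.distributions()
    )
    return {
        "python": sys.version,
        "os": platform.platform(),
        "machine_name": platform.node(),
        "cpu": platform.processor() or platform.machine(),
        "packages": packages,
    }


def new_run_record(name: str, description: str, experiment_type: str, author: str) -> dict:
    """Create a run record with one field per logged category (hypothetical schema)."""
    return {
        "run_id": uuid.uuid4().hex,
        "name": name,
        "description": description,
        "experiment_type": experiment_type,
        "author": author,
        "start_time": datetime.now(timezone.utc).isoformat(),
        "end_time": None,
        "data": {},       # e.g. dataset ID, ground-truth version, preprocessing run ID
        "params": {},     # model configuration and training parameters
        "metrics": {},    # evaluation metric values
        "artifacts": [],  # paths or URIs of output files (models, preprocessed data)
        "code": git_state(),
        "environment": environment(),
    }


def finish_run(record: dict) -> str:
    """Set the end timestamp and serialize the record, e.g. for storage."""
    record["end_time"] = datetime.now(timezone.utc).isoformat()
    return json.dumps(record, indent=2)
```

A tracking tool such as MLflow captures several of these fields automatically; the point of the sketch is only to show that each category maps naturally onto one field of the run record.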

It is worth adding that in most experiment tracking tools some experiment outputs are stored as files (attached to the run or referenced by location), while others, such as metric values, are added directly to the run data so that they can be conveniently used to compare multiple experiments.
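The distinction between file artifacts and run data can be illustrated with a deliberately tiny, hypothetical file-based tracker. The `MiniTracker` class is an assumption made for illustration, not any tool's API; in MLflow, the corresponding calls would be `mlflow.log_metric` and `mlflow.log_artifact`, and runs can be compared with `mlflow.search_runs`.

```python
import json
import tempfile
from pathlib import Path


class MiniTracker:
    """Hypothetical file-based tracker, for illustration only.

    Scalar metrics go into each run's run.json, so they can be queried and
    compared directly; larger outputs are written to the run's artifacts folder.
    """

    def __init__(self, root: Path):
        self.root = Path(root)

    def log_metric(self, run_id: str, key: str, value: float) -> None:
        path = self.root / run_id / "run.json"
        path.parent.mkdir(parents=True, exist_ok=True)
        data = json.loads(path.read_text()) if path.exists() else {"metrics": {}}
        data["metrics"][key] = value
        path.write_text(json.dumps(data))

    def log_artifact(self, run_id: str, name: str, content: bytes) -> Path:
        dest = self.root / run_id / "artifacts" / name
        dest.parent.mkdir(parents=True, exist_ok=True)
        dest.write_bytes(content)
        return dest

    def compare(self, metric: str, higher_is_better: bool = True) -> list:
        """Return (run_id, value) pairs for all runs that logged `metric`, best first."""
        rows = []
        for run_file in self.root.glob("*/run.json"):
            metrics = json.loads(run_file.read_text())["metrics"]
            if metric in metrics:
                rows.append((run_file.parent.name, metrics[metric]))
        return sorted(rows, key=lambda row: row[1], reverse=higher_is_better)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        tracker = MiniTracker(Path(tmp))
        tracker.log_metric("run-a", "dice", 0.81)
        tracker.log_metric("run-b", "dice", 0.86)
        tracker.log_artifact("run-b", "model.bin", b"placeholder weights")
        print(tracker.compare("dice"))  # [('run-b', 0.86), ('run-a', 0.81)]
```

Comparing runs only needs the small JSON files; the artifacts are never opened, which is why metrics are kept in the run data rather than inside output files.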

Experiment tracking tools

Manual experiment tracking is possible; however, there are multiple tools that automate this process, making it much more lightweight and transparent. Most of them share a core set of features that are essential for machine learning development workflows, including:

- parameter logging

- metric tracking

- artifact logging

- experiment versioning

- visual dashboards

- comparison of experiments

- tags, notes and metadata

- integration with ML frameworks

- team collaboration

- model registry

- API and CLI access

Popular experiment tracking tools include:

| Name | Key distinctive features |
| --- | --- |
| MLflow | |
| Weights & Biases (W&B) | |
| Comet | |
| DVC (Data Version Control) | |
| Neptune.ai | |
| TensorBoard | |

Our experiment tracking solution

At Graylight Imaging, we developed our experiment tracking system many years ago, based on our previous experience. As we use it in many different projects, we keep introducing changes that improve its usability. Our solution relies on the MLflow platform at its core, but our custom ML Framework (naming things is hard, as we know) determines exactly what we log and how we log it. What is specific to our solution is that we treat individual processing stages (data preprocessing, training, inference, evaluation etc.) as separate but linked experiments. Moreover, we group experiments primarily by dataset version (our dataset versioning system is a whole different topic) and by experiment type (processing, training, inference, evaluation, debug). This makes it easier to navigate among many experiments and improves readability, in particular when comparing multiple runs.

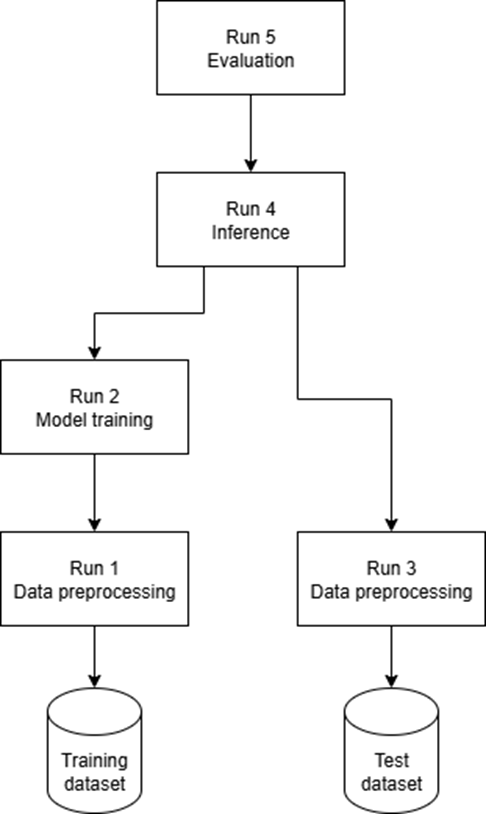
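The grouping of stage runs by dataset version and experiment type can be sketched as follows. This is a minimal illustration under stated assumptions: the `StageRun` record and the `<dataset version>/<experiment type>` naming convention are hypothetical and are not taken from our ML Framework. With MLflow, such a name could, for example, be passed to `mlflow.set_experiment`.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Experiment types named in the text.
EXPERIMENT_TYPES = ("processing", "training", "inference", "evaluation", "debug")


@dataclass
class StageRun:
    """Hypothetical record of one processing stage executed as its own run."""

    run_id: str
    experiment_type: str
    dataset_version: str
    input_run_ids: list = field(default_factory=list)  # links to upstream stage runs

    def __post_init__(self):
        if self.experiment_type not in EXPERIMENT_TYPES:
            raise ValueError(f"unknown experiment type: {self.experiment_type}")


def experiment_name(run: StageRun) -> str:
    # Hypothetical convention: one experiment per (dataset version, type) pair.
    return f"{run.dataset_version}/{run.experiment_type}"


def group_runs(runs: list) -> dict:
    """Map each experiment name to the IDs of the runs it contains."""
    groups = defaultdict(list)
    for run in runs:
        groups[experiment_name(run)].append(run.run_id)
    return dict(groups)
```

Two training runs that share a preprocessing run on the same dataset version end up side by side in one experiment, which is what makes comparing them straightforward.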

Another key feature of our solution is that runs can use other runs as their inputs. Thanks to this, it is possible to re-create the full tree of dependencies leading to a given result. The picture below displays a simplified example of how evaluation metric values can be traced back to each step that contributed to them. Note that the direction of the arrows represents dependency, not execution order.

This fine-grained approach works well for us: it helps keep track of each stage of the ML model training pipeline and makes the results reusable.
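Re-creating the dependency tree only requires each run to record the IDs of the runs it used as inputs. A minimal sketch, assuming a hypothetical `inputs` mapping from run ID to input run IDs (how these links are stored in our framework is not shown here):

```python
def format_tree(run_id: str, inputs: dict, depth: int = 0) -> list:
    """Indented lines showing `run_id` and, recursively, every run it depends on."""
    lines = ["  " * depth + run_id]
    for dep in inputs.get(run_id, []):
        lines.extend(format_tree(dep, inputs, depth + 1))
    return lines


def ancestors(run_id: str, inputs: dict) -> set:
    """All runs that `run_id` depends on, directly or indirectly (iterative DFS)."""
    seen = set()
    stack = list(inputs.get(run_id, []))
    while stack:
        dep = stack.pop()
        if dep not in seen:
            seen.add(dep)
            stack.extend(inputs.get(dep, []))
    return seen


if __name__ == "__main__":
    # Hypothetical chain: evaluation <- inference <- training <- preprocessing.
    inputs = {
        "eval-1": ["infer-1"],
        "infer-1": ["train-1", "prep-test"],
        "train-1": ["prep-train"],
    }
    print("\n".join(format_tree("eval-1", inputs)))
    # eval-1
    #   infer-1
    #     train-1
    #       prep-train
    #     prep-test
```

Because the links point from a run to its inputs, the traversal follows the same direction as the dependency arrows, so any metric logged on `eval-1` can be traced back to the exact preprocessing runs behind it.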

Summary

Experiment tracking is a crucial practice in machine learning that involves recording parameters, metrics, code versions, and outputs to ensure reproducibility, transparency, and efficiency. In medical software development, it supports regulatory compliance by making model development traceable and providing audit-ready documentation. There are multiple experiment tracking tools that offer useful features such as parameter logging, artifact tracking, and experiment comparison to streamline collaborative and compliant ML workflows.

References

1. Food and Drug Administration, Health Canada, United Kingdom’s Medicines and Healthcare products Regulatory Agency. (2021). Good machine learning practice for medical device development: Guiding principles. https://www.fda.gov/medical-devices/software-medical-device-samd/good-machine-learning-practice-medical-device-development-guiding-principles

2. Food and Drug Administration (FDA). (2023). Title 21 Code of Federal Regulations (21 CFR), Parts 820, 11, and others. U.S. Government Publishing Office. https://www.ecfr.gov/current/title-21

3. European Parliament and Council. (2017). Regulation (EU) 2017/745 of the European Parliament and of the Council of 5 April 2017 on medical devices (EU MDR). Official Journal of the European Union, L 117, 1–175. https://eur-lex.europa.eu/eli/reg/2017/745/oj

4. International Organization for Standardization (ISO). (2016). ISO 13485:2016 – Medical devices — Quality management systems — Requirements for regulatory purposes. https://www.iso.org/standard/59752.html

5. International Organization for Standardization (ISO). (2019). ISO 14971:2019 – Medical devices — Application of risk management to medical devices. https://www.iso.org/standard/72704.html

6. International Electrotechnical Commission (IEC). (2006). IEC 62304:2006 – Medical device software — Software life cycle processes. https://www.iso.org/standard/38421.html

7. MLflow documentation. https://mlflow.org

8. Weights & Biases documentation. https://docs.wandb.ai

9. Comet documentation. https://www.comet.com

10. DVC documentation. https://dvc.org

11. Neptune.ai documentation. https://neptune.ai

12. TensorBoard: Visualizing learning. https://www.tensorflow.org/tensorboard