From Voxels to Performance: Understanding Semantic Segmentation Metrics

Artificial intelligence tools are becoming increasingly integrated into healthcare, with the semantic segmentation of medical images emerging as one of the most promising applications. A wide range of metrics can be used to evaluate semantic segmentation models, each highlighting a different aspect of a model's performance. Choosing complementary metrics is crucial both for selecting the best model and for understanding its limitations. This is especially important in healthcare, where errors can directly impact patient outcomes.

Confusion matrix-based metrics

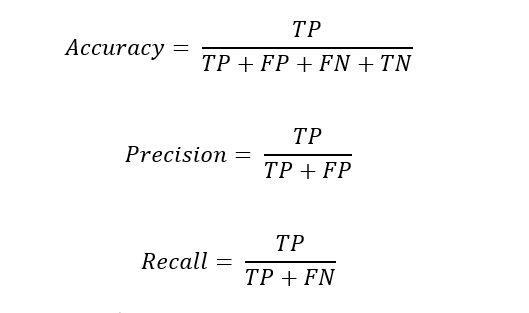

Classical metrics derived from the confusion matrix remain applicable for evaluating semantic segmentation models. Each voxel falls into one of four categories: true positive (TP), false positive (FP), false negative (FN), or true negative (TN). From these counts, metrics such as accuracy, precision, and recall can be computed:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad \text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}$$

The most straightforward one, accuracy, measures the fraction of voxels that are classified correctly. In medical imaging, however, lesions or regions of interest often occupy only a small fraction of the image, so a model can reach high accuracy by labeling almost everything as background, which makes accuracy a misleading measure [1]. Precision and recall, on the other hand, focus on the foreground voxels: precision measures the fraction of predicted foreground voxels that are truly relevant, while recall measures the fraction of relevant voxels that are successfully detected.
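The effect of class imbalance on accuracy can be illustrated with a minimal NumPy sketch. The helper names (`confusion_counts`, `accuracy_precision_recall`) and the toy volume are assumptions made for this illustration, not part of any particular library.

```python
import numpy as np


def confusion_counts(pred, truth):
    """Count TP, FP, FN, TN voxels for two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))
    tn = int(np.sum(~pred & ~truth))
    return tp, fp, fn, tn


def accuracy_precision_recall(pred, truth):
    """Voxel-wise accuracy, precision and recall (NaN when undefined)."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if (tp + fp) else float("nan")
    recall = tp / (tp + fn) if (tp + fn) else float("nan")
    return accuracy, precision, recall


# Hypothetical toy volume: an 8-voxel "lesion" inside 8000 voxels.
truth = np.zeros((20, 20, 20), dtype=bool)
truth[5:7, 5:7, 5:7] = True

# A prediction that contains only background misses the lesion entirely.
empty_pred = np.zeros_like(truth)
acc, prec, rec = accuracy_precision_recall(empty_pred, truth)

print(f"accuracy={acc:.3f}, precision={prec}, recall={rec:.3f}")
# accuracy is 0.999 although recall is 0.0; precision is undefined (NaN)
```

In this sketch, precision is reported as NaN when no foreground voxel is predicted; other conventions (for example returning 0 or 1) are also in use and should be stated when reporting results.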

Dice Similarity Coefficient (DSC)

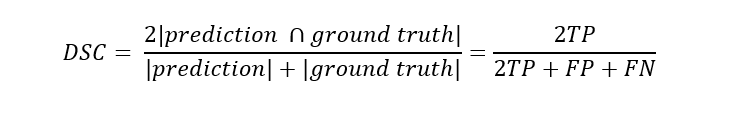

One of the most commonly reported metrics for semantic segmentation is the Dice Similarity Coefficient (DSC). It can be derived from the confusion matrix as well and is defined as:

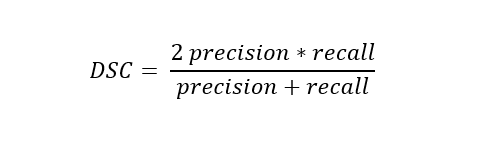

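The DSC measures the overlap between the predicted and ground truth voxels. It accounts for both under- and over-segmentation errors (Fig. 1) and can be expressed as the harmonic mean of precision and recall, so it is high only when both are high:

$$\text{DSC} = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} = \frac{2\,TP}{2\,TP + FP + FN}$$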

DSC is sensitive to the volume of the foreground: the same absolute error is penalized more heavily in smaller structures (Fig. 1). DSC is an example of an overlap-based metric, alongside others such as the Intersection over Union (IoU) and Volume Overlap Error (VOE).
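The size sensitivity can be made concrete with a short NumPy sketch. The `dice` helper and the two toy structures are assumptions for illustration; in both cases the prediction misses exactly 100 voxels, yet the resulting DSC differs considerably.

```python
import numpy as np


def dice(pred, truth):
    """Dice Similarity Coefficient for two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    return float(2.0 * np.sum(pred & truth) / denom)


shape = (20, 20, 20)

# Large structure: 1000 voxels, prediction misses one 10x10 slice.
large_truth = np.zeros(shape, dtype=bool)
large_truth[0:10, 0:10, 0:10] = True
large_pred = large_truth.copy()
large_pred[0:10, 0:10, 9] = False

# Small structure: 200 voxels, prediction misses the same number of voxels.
small_truth = np.zeros(shape, dtype=bool)
small_truth[0:10, 0:10, 0:2] = True
small_pred = small_truth.copy()
small_pred[0:10, 0:10, 1] = False

dice_large = dice(large_pred, large_truth)  # 1800 / 1900, about 0.947
dice_small = dice(small_pred, small_truth)  # 200 / 300, about 0.667
print(f"large: {dice_large:.3f}, small: {dice_small:.3f}")
```

Returning 1.0 for two empty masks is only one possible convention; some evaluation pipelines exclude such cases or treat them separately.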

Fig. 1: Dice Similarity Coefficient (DSC) for over- and under-segmentation cases and varying ground truth size.

Voxel-level vs object-level analysis

Voxel-level metrics are intuitive and straightforward to compute. However, they can introduce dataset-specific biases: for instance, when segmenting multiple objects of varying sizes, larger structures may dominate the results [2]. In such cases, object-level metrics can allow for a fine-grained analysis of segmentation performance.

Per-object metrics are calculated by comparing each ground truth object with its corresponding predicted object, typically selecting the predicted object with the highest overlap when multiple predictions match the same ground truth object. These pairwise metrics can then be used to derive binary detection metrics, in which each whole object is classified as a true positive, false positive, or false negative, commonly by counting predictions that exceed a predefined overlap threshold as true positives [4].
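The matching procedure described above can be sketched with connected-component labeling from SciPy. This is a simplified illustration under stated assumptions: objects are face-connected components, overlap is measured with per-object Dice, and the threshold `min_overlap=0.1` is a hypothetical value rather than the one used in [4] or in any challenge.

```python
import numpy as np
from scipy import ndimage


def lesion_wise_detection(pred, truth, min_overlap=0.1):
    """Return (TP, FN, FP) object counts for two binary masks.

    Each ground truth object is paired with the overlapping predicted
    object that has the highest Dice; the pair counts as a true positive
    if that Dice reaches `min_overlap` (an assumed threshold).
    """
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    truth_labels, n_truth = ndimage.label(truth)
    pred_labels, n_pred = ndimage.label(pred)

    matched_pred = set()
    tp = 0
    for t in range(1, n_truth + 1):
        truth_obj = truth_labels == t
        # Predicted components that touch this ground truth object.
        candidates = np.unique(pred_labels[truth_obj])
        candidates = candidates[candidates > 0]
        best_dice, best_id = 0.0, None
        for p in candidates:
            pred_obj = pred_labels == p
            overlap = np.sum(pred_obj & truth_obj)
            d = 2.0 * overlap / (pred_obj.sum() + truth_obj.sum())
            if d > best_dice:
                best_dice, best_id = d, int(p)
        if best_id is not None and best_dice >= min_overlap:
            tp += 1
            matched_pred.add(best_id)

    fn = n_truth - tp
    fp = n_pred - len(matched_pred)  # unmatched predictions
    return tp, fn, fp


# Toy case: one large lesion (216 voxels) and two small ones (8 voxels each).
truth = np.zeros((20, 20, 20), dtype=bool)
truth[2:8, 2:8, 2:8] = True
truth[12:14, 12:14, 12:14] = True
truth[16:18, 2:4, 2:4] = True

# The prediction captures the large lesion exactly and misses both small ones.
pred = np.zeros_like(truth)
pred[2:8, 2:8, 2:8] = True

tp, fn, fp = lesion_wise_detection(pred, truth)
voxel_recall = np.sum(pred & truth) / truth.sum()  # 216 / 232, about 0.93
lesion_recall = tp / (tp + fn)                     # 1 / 3, about 0.33
print(f"voxel-wise recall={voxel_recall:.2f}, lesion-wise recall={lesion_recall:.2f}")
```

The toy case shows the same pattern as a voxel-level summary dominated by a large structure: voxel-wise recall looks high while two of the three lesions are missed.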

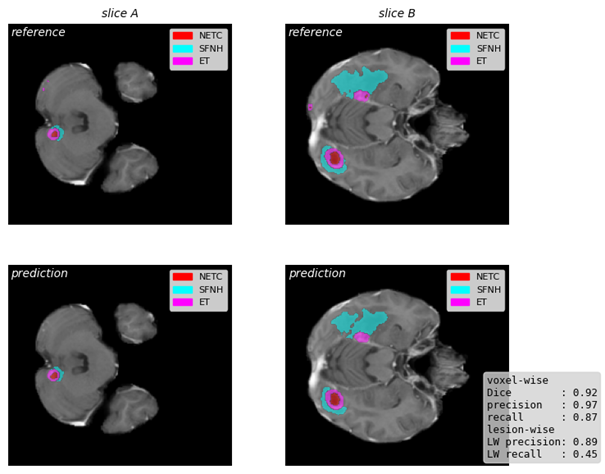

As illustrated by an example from last year's BraTS-METS challenge (Fig. 2), object-level analysis can reveal missed lesions in an otherwise accurate brain metastases segmentation (voxel-wise recall: 0.81, lesion-wise recall: 0.46). To find out more about our contribution to the challenge, see the blog post "BraTS 2025 – Another Challenge Success for the Graylight Imaging Team".

Fig. 2: Example segmentation of brain metastases using a model trained for the BraTS-METS 2025 challenge, with corresponding metrics; data from the challenge training dataset [5].

Boundary-based metrics

The metrics discussed above all depend on the number of voxels shared by the prediction and the ground truth, but they are unaware of the shape and smoothness of the segmentations. It is therefore recommended to pair overlap-based metrics with complementary boundary-based metrics [3], which focus on spatial alignment and on distances between predicted and reference object contours. Examples include the Normalized Surface Distance (the fraction of boundary voxels that lie within an accepted distance of the other boundary) and the Hausdorff Distance (the maximum distance from a point on one boundary to the nearest point on the other) [2].
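As a rough illustration of why the two families complement each other, the sketch below computes a brute-force Hausdorff Distance with NumPy for two hypothetical 2D predictions. The helper names are assumptions, the surface is approximated by foreground voxels with at least one background face-neighbour, and the pairwise distance matrix makes this suitable for small masks only; established evaluation libraries use more efficient implementations.

```python
import numpy as np


def surface_voxels(mask):
    """Coordinates of foreground voxels with a background face-neighbour."""
    mask = np.asarray(mask, dtype=bool)
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    core = tuple(slice(1, -1) for _ in range(mask.ndim))
    interior = mask.copy()
    for axis in range(mask.ndim):
        for shift in (-1, 1):
            # A voxel stays "interior" only if this neighbour is foreground.
            interior &= np.roll(padded, shift, axis=axis)[core]
    return np.argwhere(mask & ~interior)


def hausdorff_distance(pred, truth, spacing=1.0):
    """Symmetric Hausdorff Distance between the surfaces of two masks."""
    a = surface_voxels(pred) * np.asarray(spacing, dtype=float)
    b = surface_voxels(truth) * np.asarray(spacing, dtype=float)
    if len(a) == 0 or len(b) == 0:
        return float("inf")  # assumed convention for empty masks
    dists = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return float(max(dists.min(axis=1).max(), dists.min(axis=0).max()))


def dice(pred, truth):
    """Dice Similarity Coefficient for two non-empty binary masks."""
    return float(2.0 * np.sum(pred & truth) / (pred.sum() + truth.sum()))


truth = np.zeros((30, 30), dtype=bool)
truth[5:15, 5:15] = True

# Prediction A: the same square shifted by one voxel.
shifted = np.zeros_like(truth)
shifted[6:16, 5:15] = True

# Prediction B: the exact square plus a thin 5-voxel spur.
spur = truth.copy()
spur[10, 15:20] = True

print(dice(shifted, truth), hausdorff_distance(shifted, truth))  # 0.9, 1.0
print(dice(spur, truth), hausdorff_distance(spur, truth))        # about 0.976, 5.0
```

Here the prediction with the spur has the higher DSC but the larger Hausdorff Distance, which is the kind of shape error that overlap-based metrics alone would not reveal.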

References

[1] Müller D, et al. Towards a guideline for evaluation metrics in medical image segmentation. BMC Res Notes. 2022;15:210.

[2] Reinke A, et al. Understanding metric-related pitfalls in image analysis validation. Nat Methods. 2024;21(2):182–194.

[3] Kocak B, et al. Evaluation metrics in medical imaging AI: fundamentals, pitfalls, misapplications, and recommendations. Eur J Radiol Artif Intell. 2025;3.

[4] Machura B, et al. Deep learning ensembles for detecting brain metastases in longitudinal multi-modal MRI studies. Comput Med Imaging Graph. 2024;116.

[5] Maleki N, et al. Analysis of the MICCAI Brain Tumor Segmentation — Metastases (BraTS-METS) 2025 Lighthouse Challenge: brain metastasis segmentation on pre- and post-treatment MRI. arXiv. 2025. doi:10.48550/arXiv.2504.12527.